Introduction#

To represent real world (stochastic system, systems with random disturbances, e.g. pedestrians walking onto the self driving car while it’s going to park spot), we need to define constraints in a way to formally explain our world (Borel-measurability - ensures that the system’s state space, control action space, and disturbance space are well-defined and can be analyzed using rigorous mathematical tools.).

This is not directly related to the raw implementation (architecture of NN, which programming language etc.) . Those are constraints to have if you want to create a system that is verified, safe and able to analyze. This approach works on all networks as long as the activation function (softplus) is continuous. Also there is the discretization that reduces the problem to a finite set of points, making it computationally feasible to analyze and verify the system’s behavior.

Concept#

We do not want rapid changes in the system, which are essential for stability and predictability (e.g., to prevent excessive acceleration that might compromise the car’s ability to stop in case of uncertainty). To ensure this, the system is assumed to be Lipschitz continuous, meaning that the rate of change of the system’s state is bounded by a Lipschitz constant.

The primary objective is to ensure that the system, under the learned control policy, satisfies both (formally RASMs) reachability (reaching a target set, formally RSMs) and safety (avoiding unsafe regions, formally SBFs) properties with a specified probability.

In the context of a self-driving car, the agent (car) knows its starting point, which is the initial state, and identifies unsafe states, such as obstacles, as well as the target state, which is the parking spot.

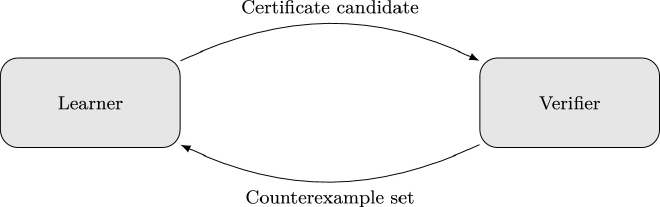

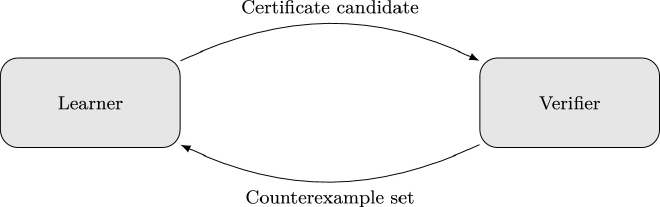

Learning and Verification#

There are 2 Neural Networks, Parallel trained: Learning and

Verification, and control policy which dictate the way both neural

networks learn and operate (determines the paths the car takes to

navigate the environment).

Learning#

Learning a neural network control policy(how the car should move) and a neural network certificate function(the mathematical guarantee). The learner minimizes a loss function that encodes the desired properties (reachability, safety, and Lipschitz continuity). Loss function includes:

Expected Decrease Loss: Ensures that the certificate function decreases in expectation over time (RASMs, RSMs, SBFs, LexRSMs).

Unsafe Loss: Ensures that certificate function is high in unsafe regions.

Initial Loss: Ensures that the certificate function is low in the initial state.

Lipschitz Regularization: Ensures that the control policy and certificate function do not change too rapidly.

Verification#

Verification formally verifies whether the learned control policy and certificate function satisfy the desired properties. The verifier uses interval arithmetic abstract interpretation to compute bounds on the expected values of the neural network functions and checks the conditions for the certificate functions. If the verifier finds that the learned policy or certificate function does not satisfy the desired properties, it generates counterexamples (e.g., states where the car might collide with an obstacle) and passes them back to the learner for further refinement.

References#

https://link.springer.com/chapter/10.1007/978-3-031-30823-9_1